NVIDIA at CES 2026: Jensen Huang Unveils Vera Rubin and the Next Architecture of AI

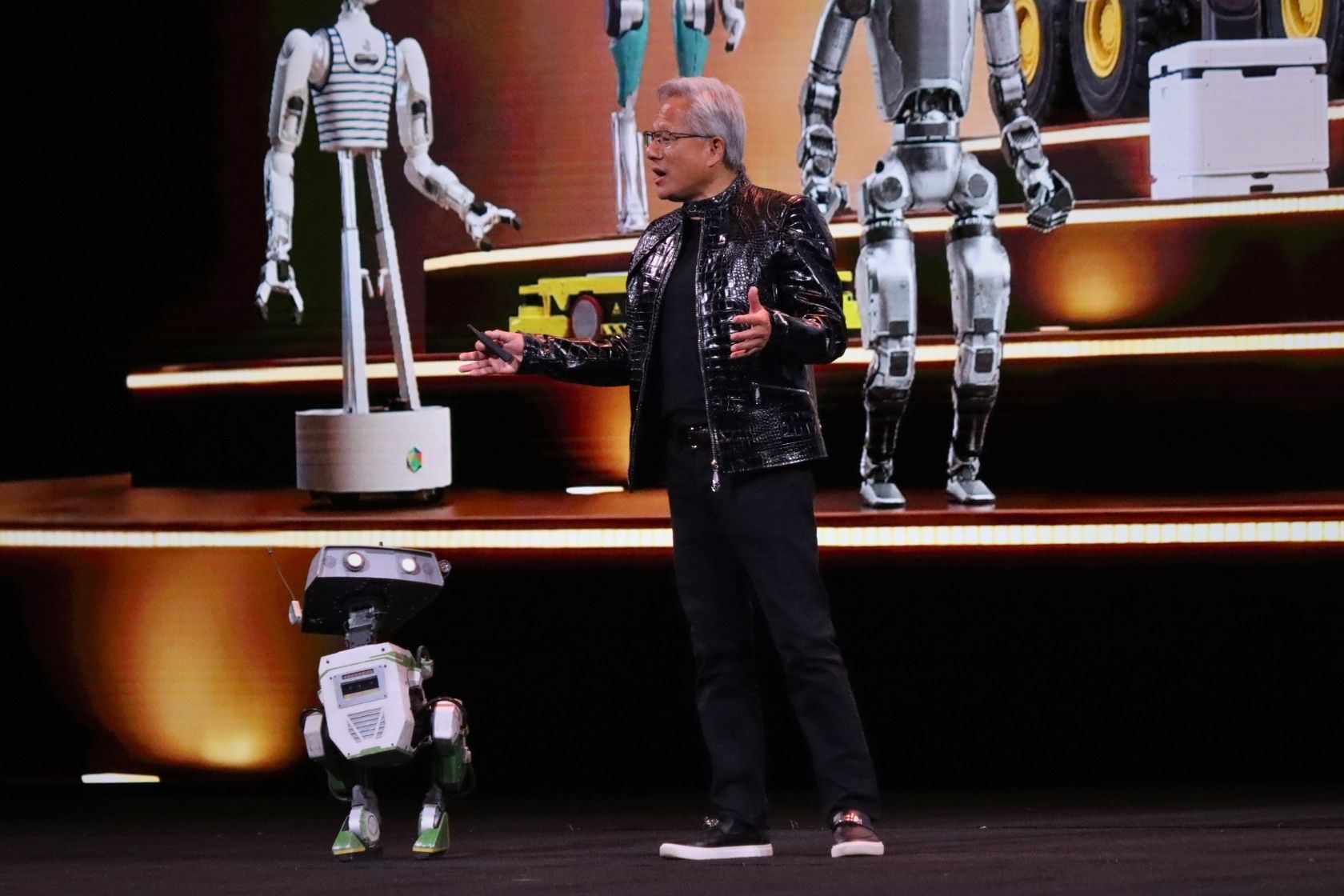

At CES 2026, NVIDIA founder and CEO Jensen Huang presented a sweeping vision for the future of artificial intelligence, one defined not by single-shot answers but by reasoning, agentic systems, and a radically re-engineered computing stack.

From open frontier models to the debut of the Vera Rubin AI supercomputer, NVIDIA’s announcements signal a structural shift in how intelligence is built, deployed, and scaled across every industry.

Key NVIDIA Announcements at CES 2026

- Vera Rubin AI supercomputer platform enters full production

- Reasoning and agentic AI models powered by test-time scaling

- Expansion of open-source frontier AI models and libraries

- Breakthrough AI networking with Spectrum-X Ethernet and silicon photonics

- BlueField-4 DPUs redefine data center virtualization, security, and AI isolation

- AI-native memory and storage architecture designed for long-context inference

From Language Models to Reasoning Intelligence

Artificial intelligence has crossed an inflection point.

What began as large language models generating one-shot responses has evolved into thinking systems: AI capable of reasoning, planning, using tools, and adapting dynamically at inference time.

“Inference is no longer a one-shot answer,” Jensen Huang said. “Inference is now a thinking process.”

This shift is powered by test-time scaling, where models are allowed to think longer, generating more tokens and applying structured reasoning to improve outcomes. Combined with reinforcement learning and chain-of-thought techniques, AI systems can now solve problems they were never explicitly trained to handle.
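To make "thinking longer" concrete, here is a toy simulation of self-consistency, one well-known form of test-time scaling in which a model samples several reasoning chains and keeps the most common final answer. This is our illustration, not necessarily the technique NVIDIA described. The "model" is hypothetical: each sampled chain is right with probability 0.6 and otherwise lands on one of four wrong answers. The point is that spending more inference compute per question raises accuracy without any retraining.

```python
import random
from collections import Counter

# Hypothetical wrong answers the toy "model" can produce.
WRONG_ANSWERS = ["41", "43", "24", "7"]


def sample_answer(rng: random.Random, p_correct: float = 0.6) -> str:
    """Toy stand-in for one sampled reasoning chain (hypothetical model).

    Returns the correct answer "42" with probability p_correct and
    otherwise one of several distinct wrong answers.
    """
    if rng.random() < p_correct:
        return "42"
    return rng.choice(WRONG_ANSWERS)


def majority_vote(rng: random.Random, n_samples: int) -> str:
    """Spend more inference compute: sample n_samples chains, keep the most common answer."""
    votes = Counter(sample_answer(rng) for _ in range(n_samples))
    return votes.most_common(1)[0][0]


def accuracy(n_samples: int, trials: int = 2000, seed: int = 0) -> float:
    """Fraction of simulated questions answered correctly at a given sampling budget."""
    rng = random.Random(seed)
    correct = sum(majority_vote(rng, n_samples) == "42" for _ in range(trials))
    return correct / trials


if __name__ == "__main__":
    for n in (1, 5, 15):
        print(f"{n:>2} samples per question: accuracy {accuracy(n):.3f}")
```

With one sample, accuracy sits near the single-chain rate of 60%; with 5 and then 15 samples, the majority answer is correct far more often. Real systems combine this kind of parallel sampling with longer sequential chains of thought, and the cost grows with every extra token generated.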

Each phase of AI development (pre-training, post-training, and test-time reasoning) demands exponentially more compute, driving a fundamental rethink of AI infrastructure.

Agentic AI: The Foundation of Future Applications

One of the most consequential developments highlighted at CES was the rise of agentic AI systems.

These systems can:

- Perform research and information retrieval

- Use tools and APIs

- Plan multi-step workflows

- Simulate outcomes before acting
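The capabilities above boil down to a loop: decompose a goal into steps, call a tool for each step, and record what comes back. The sketch below shows that loop in miniature. Everything in it is hypothetical: the two tools are toy functions, and `plan` returns a fixed list where a real agent would ask a language model to break the goal down. The `dry_run` flag mirrors "simulate outcomes before acting" by reporting the intended calls without executing them.

```python
import operator
from typing import Callable

# Arithmetic operators the hypothetical calculator tool understands.
OPS = {"+": operator.add, "-": operator.sub, "*": operator.mul, "/": operator.truediv}


def search(query: str) -> str:
    """Hypothetical retrieval tool backed by a tiny in-memory lookup table."""
    facts = {"vera rubin": "NVIDIA AI supercomputer platform shown at CES 2026"}
    return facts.get(query.lower(), "no result")


def calculator(expression: str) -> str:
    """Hypothetical tool that evaluates 'a op b' without using eval()."""
    left, op, right = expression.split()
    return str(OPS[op](float(left), float(right)))


def plan(goal: str) -> list[tuple[str, str]]:
    """Hypothetical planner: a real agent would ask a model to decompose `goal`."""
    return [("search", "Vera Rubin"), ("calculator", "5 * 2")]


def run_agent(goal: str, tools: dict[str, Callable[[str], str]],
              dry_run: bool = False) -> list[str]:
    """Execute the plan step by step, or only describe it when dry_run is True."""
    log = []
    for tool_name, tool_input in plan(goal):
        if tool_name not in tools:
            log.append(f"skipped unknown tool {tool_name}")
        elif dry_run:
            # "Simulate before acting": report the intended call without running it.
            log.append(f"would call {tool_name}({tool_input!r})")
        else:
            log.append(f"{tool_name}({tool_input!r}) -> {tools[tool_name](tool_input)}")
    return log


if __name__ == "__main__":
    toolbox = {"search": search, "calculator": calculator}
    for line in run_agent("Summarize Vera Rubin", toolbox, dry_run=True):
        print(line)
    for line in run_agent("Summarize Vera Rubin", toolbox):
        print(line)
```

Keeping tools behind a plain name-to-function mapping is what lets one orchestrator swap in different models, APIs, or clouds without changing the loop itself.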

“Agentic systems are going to really take off from here,” Huang noted.

Unlike traditional models, agentic AI is:

- Multi-modal (text, speech, video, 3D, biology)

- Multi-model (orchestrating multiple AI models at once)

- Multi-cloud and hybrid by design, spanning edge, enterprise, and cloud environments

This architecture is becoming the default framework for future software applications rather than the exception.

Open Models Reach the Frontier

Another defining theme of NVIDIA’s CES 2026 keynote was the rapid rise of open models.

In 2024 and 2025, open-source reasoning models surprised the industry, catalyzing global participation in AI innovation. Startups, enterprises, researchers, and governments alike are adopting open models to avoid concentrating intelligence in a few closed systems.

“How is it possible that digital intelligence should leave anyone behind?” Huang asked.

While open models currently trail closed frontier systems by roughly six months, the gap continues to narrow with each release cycle. Downloads of open models have surged as organizations seek to build custom, domain-specific intelligence.

NVIDIA’s Open-Source AI Stack: NeMo, BioNeMo, and PhysicsNeMo

To support open innovation at scale, NVIDIA has built a comprehensive open-source AI stack, including:

NeMo

A full-lifecycle framework for training, fine-tuning, guardrailing, and deploying large language and agentic models.

BioNeMo

AI models and tools for protein generation, digital biology, and cellular representation.

PhysicsNeMo

Foundation models that understand physical laws, enabling breakthroughs in weather forecasting, climate modeling, and scientific simulation.

Together, these platforms allow organizations to build AI that is deeply customized yet always aligned with frontier capabilities. As Huang said:

“Your AI should be completely customizable on one hand, and always at the frontier on the other.”

The Vera Rubin Platform Explained

Vera CPU + Rubin GPU

At the center of NVIDIA’s CES announcements is Vera Rubin, the company’s next-generation AI supercomputer platform, now in full production.

- Custom Vera CPU with double the performance per watt of prior generations

- Rubin GPU delivering up to 5× the peak inference performance and 3.5× the training performance of the previous generation

- Over 220 trillion transistors per rack

This leap was achieved through what NVIDIA calls extreme co-design, re-engineering every chip, interconnect, and system layer simultaneously to overcome the slowing of Moore’s Law.

“If we don’t innovate across every chip, across the entire system, all at the same time, it is impossible to keep up,” Huang said.

NVLink, Switching, and Networking at Planetary Scale

Vera Rubin introduces:

- 400 Gbps switching, allowing every GPU to communicate with every other GPU simultaneously

- Spectrum-X Ethernet with silicon photonics, enabling AI-optimized networking at unprecedented scale

- Throughput equivalent to multiple times the global internet’s cross-sectional bandwidth

In large data centers, even a 10% networking efficiency gain can translate into billions of dollars in recovered performance, making AI networking a first-order economic concern.
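The arithmetic behind the "billions of dollars" claim is simple. Delivered work scales with effective utilization, so if better networking means accelerators spend less time waiting on data, a 10% relative rise in utilization is roughly equivalent to buying 10% more hardware at the same cost per unit of work. The sketch below assumes a hypothetical $50 billion fleet whose utilization rises from 60% to 66%; both figures are illustrative, not NVIDIA numbers, and the model ignores power and operating costs.

```python
def recovered_value_usd(fleet_cost_usd: float, util_before: float,
                        util_after: float) -> float:
    """Dollar value of the extra useful work gained by raising effective utilization.

    Delivered work scales with utilization, so going from util_before to util_after
    is like adding (util_after / util_before - 1) of the fleet, priced at the
    fleet's own cost.
    """
    if not 0 < util_before <= 1 or not 0 < util_after <= 1:
        raise ValueError("utilization must be in (0, 1]")
    return fleet_cost_usd * (util_after / util_before - 1.0)


if __name__ == "__main__":
    # Hypothetical fleet: $50B of accelerators whose effective utilization rises
    # from 60% to 66%, a 10% relative gain from less time spent waiting on the network.
    value = recovered_value_usd(50e9, 0.60, 0.66)
    print(f"Recovered capacity worth about ${value / 1e9:.1f}B")
```

Under these assumptions the gain is worth about $5 billion of equivalent compute, which is why networking sits alongside the GPU itself in the economics of an AI factory.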

Cooling, Efficiency, and the AI Factory

Despite delivering massive performance gains, Vera Rubin achieves:

- Full liquid cooling using 45°C hot water

- No need for chillers

- Dramatically improved energy efficiency

This design allows AI factories to scale sustainably even as power demands double.

Reinventing AI Memory and Storage

As reasoning models generate more tokens and maintain longer context windows, traditional memory architectures are no longer sufficient.

NVIDIA outlined a new approach to AI-native memory, designed to support:

- Long-term conversational context

- Lifelong AI memory

- Multi-user supercomputer sharing

“We want AI to stay with us our entire lives and remember every conversation we’ve ever had,” Huang said.

This shift requires moving beyond SQL-based storage toward semantic, AI-aware memory systems, tightly integrated with networking and compute.
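One concrete reason long contexts strain conventional memory is the key-value (KV) cache that transformer models keep during inference: for every layer and every token already processed, the model stores a key vector and a value vector so it does not have to recompute them. The keynote coverage does not name the KV cache; this is our illustration of the pressure it describes. The model shape below (80 layers, 8 KV heads, head dimension 128, 16-bit values) is a hypothetical example.

```python
def kv_cache_bytes(seq_len: int, n_layers: int, n_kv_heads: int, head_dim: int,
                   bytes_per_elem: int = 2, batch: int = 1) -> int:
    """Size of a transformer's key/value cache.

    Each layer stores one key and one value vector (hence the factor 2) of length
    head_dim for every KV head and every cached token.
    """
    return 2 * n_layers * n_kv_heads * head_dim * bytes_per_elem * seq_len * batch


if __name__ == "__main__":
    # Hypothetical model shape: 80 layers, 8 KV heads (grouped-query attention),
    # head_dim 128, FP16 values (2 bytes each).
    config = dict(n_layers=80, n_kv_heads=8, head_dim=128)
    for tokens in (8_192, 131_072, 1_000_000):
        gib = kv_cache_bytes(tokens, **config) / 2**30
        print(f"{tokens:>9,} tokens -> {gib:8.1f} GiB of KV cache per sequence")
```

At 320 KiB per token, this configuration needs 40 GiB for a single 128K-token conversation and roughly 305 GiB for one million tokens, before counting model weights or additional users. Keeping that context available across sessions is the kind of workload that pushes memory out of the GPU and into a tiered, network-attached design.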

Why This Matters for Enterprises and Startups

NVIDIA’s CES 2026 announcements have immediate implications:

- AI is now a platform, not an application

- Open models enable sovereign and enterprise-controlled intelligence

- Reasoning and agentic systems reduce the need for task-specific training

- Infrastructure decisions now determine competitive advantage

- Networking and memory are as critical as compute

Organizations that adapt early will shape how intelligence is deployed across industries, from healthcare and finance to robotics, climate science, and entertainment.

Frequently Asked Questions

What did NVIDIA announce at CES 2026?

NVIDIA unveiled the Vera Rubin AI platform, advances in agentic and reasoning AI, expanded open-source models, and major innovations in networking, memory, and data center architecture.

What is NVIDIA Vera Rubin?

Vera Rubin is NVIDIA’s next-generation AI supercomputer platform designed to scale training and inference for frontier AI models using co-designed CPU, GPU, networking, and cooling systems.

What is test-time scaling in AI?

Test-time scaling allows AI models to think longer at inference time, generating more tokens and reasoning steps to improve accuracy and problem-solving.

What are agentic AI systems?

Agentic systems are AI models capable of planning, using tools, conducting research, and breaking complex problems into steps, enabling adaptive intelligence.

About Golden Medina Services

Golden Medina Services provides professional media, editorial, and production coverage for technology, innovation, and global events. Our CES 2026 coverage focuses on the leaders, platforms, and ideas shaping the next generation of computing.